One of my recent projects has been to build out a solution for one of our applications that gathers data from a network. Right now it runs as a VM on ESX or oVirt and gathers data all day and night; it’s fantastic.

This, however, was a problem for clients that don’t have VM hosts with enough system resources to run our VM or, better yet, have no VM host at all!

In comes this service-in-a-can idea: can we transplant our VM onto an SBC (single-board computer) and have it work in a more autonomous manner, gathering data wherever we put it, however we deploy it?

Our VM uses Docker containers to compartmentalize services, whether it be a system that checks popular websites for responsiveness or a container that hosts Icinga for host checks. So, I opted to spec out the VM for 2 vCPU and 4GB of RAM, more than enough to support a system that still doesn’t know what else is going to be on it. My goal was to be able to support anything the development leads wanted to put on it down the line without having to worry about exhausting the system resources available to it. The worst thing that could have happened is that we sold clients VM servers that would support this specific VM, and then had to upgrade RAM later on to support further functionality.

The Hardware

After a fair amount of searching, and keeping in mind that a cheaper alternative was more likely to gain traction and open doors with clients, I opted to go with a Rock64 4GB RAM unit. Along with the board I got the following:

- Rock64 4G SBC – $44.95 USD

- 5V 3A Power Supply – $6.99 USD

- Rock64 Aluminum Casing – $12.99 USD

- 32GB eMMC Module – $24.95 USD

All in for $89.88. Slick, right?

Note: Having completed the project, in hindsight I would have dropped the eMMC module in favor of a 64GB microSDXC card.

Researching CentOS on Rock64

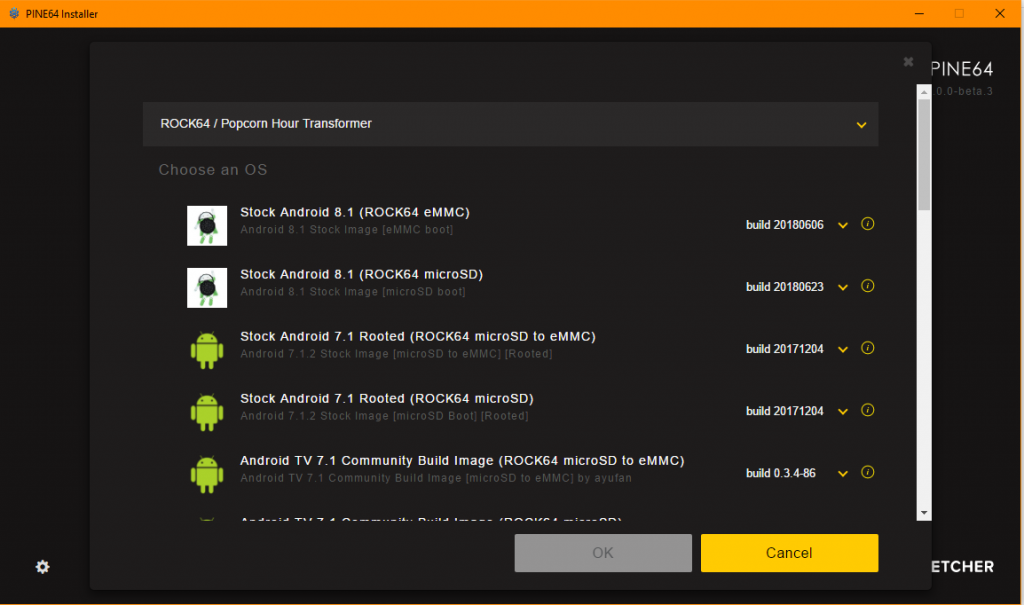

If you’ve found my post, you’ll know that there is LITTLE out there on CentOS when you compare it to, say, Debian or Ubuntu. Heck, the PINE64 Installer itself, which lets you easily flash an SD card with an operating system, only offers Debian, Ubuntu, Armbian, or Android for the Rock64.

It’s a slick tool, but I was a little taken aback by the lack of non-Debian distros. Was running CentOS on ARM that hard? There is a SIG dedicated to both ARMv7 (32-bit) and ARMv8 (AArch64), with current releases alongside the normal x86 variants.

Doing some google searching I found the following in addition to the pine64.org forum post asking about CentOS support for the pine boards (I assume the rock64 just uses a newer chip but otherwise is the same).

At first glance it looks promising, but having been through their steps, it doesn’t work. Something about the Rock64 that I never dug into would never let the board boot. If there had been an easy way to attach a serial console to the unit, I would have been able to get a better understanding of what the issue was, but I ended up taking a different route (although I borrowed some of the ideas in the document).

Digging into the distributions that came with the PINE64 installer, I found the github for the maintainer:

https://github.com/ayufan-rock64/linux-build

Admittedly, I used Step 2 from umiddelb’s document: I kept /boot, /lib/modules and /lib/firmware, but ended up using the latest AArch64 build from CentOS, which happens to be 7.4.1708.

Setup Steps

The following is the step-by-step I took on my Fedora-based system to get an SD card set up and running with the stretch-minimal-rock64-0.7.11-1075 image from ayufan and the CentOS-7-aarch64-rootfs-7.4.1708 tarball from one of the many CentOS 7 vaults (I used Tripadvisor’s).

Download your Rock64 image (based on stretch) as well as the most recent CentOS 7 rootfs tarball.

curl -sSL -o CentOS-7-aarch64-rootfs-7.4.1708.tar.xz https://mirrors.tripadvisor.com/centos-vault/altarch/7.4.1708/isos/aarch64/CentOS-7-aarch64-rootfs-7.4.1708.tar.xz

curl -sSL -o stretch-minimal-rock64-0.7.11-1075-arm64.img.xz https://github.com/ayufan-rock64/linux-build/releases/download/0.7.11/stretch-minimal-rock64-0.7.11-1075-arm64.img.xz
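Before unpacking anything, it can be worth confirming that the downloads arrived intact. The sketch below assumes you have a published SHA-256 checksum for each file to compare against; this post does not confirm where (or whether) the vault mirror or the ayufan release page lists one. verify_sha256 is a hypothetical helper name, not part of any tool.

```shell
# Hypothetical helper: compare a file's SHA-256 digest against an expected value.
# Assumption: the expected checksum comes from a source you trust.
verify_sha256() {
    file="$1"
    expected="$2"
    # sha256sum prints "<digest>  <filename>"; keep only the digest.
    actual=$(sha256sum "$file" | awk '{ print $1 }')
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file" >&2
        return 1
    fi
}
```

It would be called with the file name and the published checksum, for example verify_sha256 CentOS-7-aarch64-rootfs-7.4.1708.tar.xz followed by the checksum string from the mirror.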

Unpack the image (we’ll extract the tarball right onto the SD card later).

unxz stretch-minimal-rock64-0.7.11-1075-arm64.img.xz

Find your SD card if you haven’t already (make sure it’s unmounted), and write the image to the disk.

dd bs=1M if=stretch-minimal-rock64-0.7.11-1075-arm64.img of=/dev/mmcblk0

Note: You will need to expand the root partition by a few GB; the Debian-based partition layout is smaller than what we need (since I keep all of the Debian folders). If you were getting rid of everything aside from /boot, /lib/modules and /lib/firmware, there should be enough space when extracting the CentOS rootfs.

parted /dev/mmcblk0 resizepart 7 10240 # move the end of partition 7 out to roughly the 10GB mark

resize2fs /dev/mmcblk0p7

sync
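Because dd and parted will happily overwrite whatever device they are pointed at, a small guard can be run before the write and resize steps above. This is a minimal sketch, assuming a Linux host where /proc/mounts lists mounted devices; device_is_unmounted is a hypothetical helper name, and the optional second argument exists only so the check can be tried against a sample mount table.

```shell
# Hypothetical helper: succeed only if no partition of the given device
# appears as a mount source in the mount table (default: /proc/mounts).
device_is_unmounted() {
    dev="$1"
    table="${2:-/proc/mounts}"
    # The first field of each line is the source device, e.g. /dev/mmcblk0p7.
    # index(...) == 1 is a literal prefix match, so /dev/mmcblk0 also covers its partitions.
    if awk -v d="$dev" 'index($1, d) == 1 { found = 1 } END { exit !found }' "$table"; then
        echo "$dev (or one of its partitions) is mounted" >&2
        return 1
    fi
    return 0
}
```

One way to use it is to chain it in front of the destructive command, for example device_is_unmounted /dev/mmcblk0 && dd ..., so that dd only runs when the check passes.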

Mount the card (I chose /mnt/sd-rock64) and move the folders and files around.

mount /dev/mmcblk0p7 /mnt/sd-rock64

cd /mnt/sd-rock64

mkdir debian-src

mv * debian-src/

tar --numeric-owner -xpJf /mnt/CentOS-7-aarch64-rootfs-7.4.1708.tar.xz -C .

# I move the CentOS folders aside just because I’m a pack rat; feel free to remove them.

mv boot boot.centos

mv lib/firmware lib/firmware.centos

cp -R debian-src/boot/ .

cp -R debian-src/lib/firmware lib/

cp -R debian-src/lib/modules lib/
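Since the board boots with the Debian image's kernel, the copied boot, lib/modules and lib/firmware directories are the pieces that must be in place. The following is a minimal sketch of a sanity check that could be run from the host before unmounting; check_kernel_pieces is a hypothetical helper name, and it only checks that each directory exists and is non-empty, not that the contents are correct.

```shell
# Hypothetical helper: confirm the kernel-related directories carried over
# from the Debian image exist and are non-empty under the given root.
check_kernel_pieces() {
    root="$1"
    for d in boot lib/modules lib/firmware; do
        # -d follows symlinks, so a lib -> usr/lib symlink is fine.
        if [ ! -d "$root/$d" ] || [ -z "$(ls -A "$root/$d" 2>/dev/null)" ]; then
            echo "missing or empty: $d" >&2
            return 1
        fi
    done
    echo "kernel pieces present in $root"
}
```

With the card mounted as above, it would be called as check_kernel_pieces /mnt/sd-rock64.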

Use blkid to get the UUID of mmcblk0p7, then add an entry to etc/fstab with the root partition defined.

echo 'UUID=ccdc39df-5dee-41fb-8f85-c68ee54dcd94 / ext4 defaults 0 0' >> etc/fstab
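The UUID above belongs to one particular card, so yours will differ. As a minimal sketch, the entry can be generated instead of typed by hand; fstab_root_line is a hypothetical helper name, and the mount options simply mirror the line above.

```shell
# Hypothetical helper: print an fstab entry for an ext4 root filesystem
# identified by the UUID passed as the first argument.
fstab_root_line() {
    printf 'UUID=%s / ext4 defaults 0 0\n' "$1"
}

# On the real card, the UUID could be read with blkid and appended like this:
#   uuid=$(blkid -s UUID -o value /dev/mmcblk0p7)
#   fstab_root_line "$uuid" >> etc/fstab
```

The blkid call is left as a comment because it needs root privileges and the actual device to be present.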

Lastly, change the root password hash in etc/shadow. I never could find what the default password was supposed to be; some people said “centos”, but it never worked, and this is just easier.

openssl passwd -1
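The hash printed by openssl then has to replace the second colon-separated field of the root line in etc/shadow. Doing that in an editor works fine; as a minimal sketch, it can also be scripted. set_root_hash is a hypothetical helper name, and the hash is passed through the environment so that the "$" characters in it are not interpreted by awk.

```shell
# Hypothetical helper: replace the password field of the root entry
# in a shadow-format file (fields separated by ":").
set_root_hash() {
    shadow_file="$1"
    tmp="$shadow_file.new"
    # Only the line whose first field is "root" is changed; all others pass through.
    NEW_HASH="$2" awk 'BEGIN { FS = OFS = ":" }
        $1 == "root" { $2 = ENVIRON["NEW_HASH"] }
        { print }' "$shadow_file" > "$tmp" || return 1
    # Write back in place so the original file's ownership and mode are kept.
    cat "$tmp" > "$shadow_file" && rm -f "$tmp"
}
```

From the root of the mounted card, it could be used as set_root_hash etc/shadow "$(openssl passwd -1)", which prompts for the new password and writes the resulting hash.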

Go ahead, unmount the root partition and stick the SD card into your Rock64; it should boot up. Looking back through these steps, I don’t see any glaring errors, or at least I haven’t come across anything yet that was a showstopper.

Final Thoughts

My setup may not be what you need, or have a vision for, but my hope was to provide a more verbose setup method for those that may come across this.

In the end I probably won’t be using the Rock64 unit for my specific application. As it turns out, some of the vendors behind the services we run in containers don’t supply binaries compiled for ARM CPUs. I’m looking at you, Icinga; I don’t really want to sit here for an hour waiting for it to compile just to find out that the permissions are wrong or something didn’t compile quite right.

Hopefully your build works out. The Rock64 is an amazing piece of technology for under $100, so it will be at the top of my list down the line if I get to look at ARM-based systems again.